I recently discovered some web archiving tools, and I’m using them to provide local archives of some links on this site. For instance, here’s an archive of my ZSA interview. I blogged about this interview back when it happened and included a link to ZSA’s site, but this local archive will stay working even if the ZSA site goes down (not that I expect that to happen).

Creating an archive

There are lots of ways to obtain archives.

I mostly use wget --warc-file and https://archiveweb.page.

wget --warc-file

wget has had WARC support for a few years now.

It is the simplest and by far the fastest way to make an archive.

It can’t always handle very complex dynamic sites and won’t handle web “applications” properly,

and some sites have countermeasures in place that will cause it to fail.

It’s a good option to try first.

I use a command like this:

useragent="Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:121.0) Gecko/20100101 Firefox/121.0"

wget \

--warc-file=example.com-archive \

--page-requisites \

--delete-after \

--no-directories \

--user-agent="$useragent" \

https://example.com

--warc-file=example.com-archive: createexample.com-archive.warc.gzin the current directory--page-requisites: include page requisites like CSS, images, JavaScript, etc; this isn’t always perfect but is frequently good enough--delete-after: keep only the WARC, not separate copies of the html and resources--no-directories: delete the directories it creates too--user-agent=...: use a real browser user agent, because some sites will not serve the actual content to wget’s default user agent- You can pass multiple URLs at the end and they’ll all be included in the archive

archiveweb.page

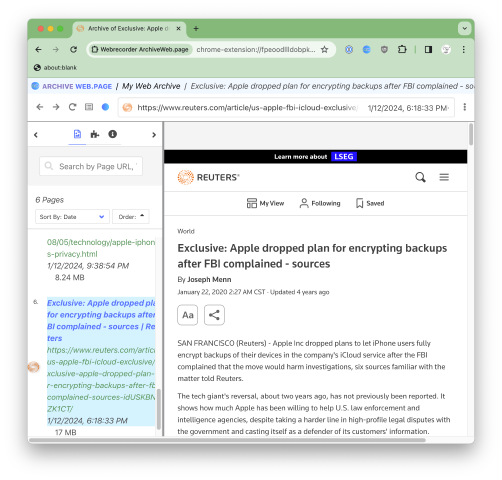

The Chrome extension available at https://archiveweb.page (created by the webrecorder team) can generate the most complete archives. You click a button to start recording, and it creates the archive as you browse, including when interacting with dynamic content, browsing to multiple URLs, using any real cookies or login info in your browser, etc.

Once you’ve finished, an archive is saved in the extension, and you can “download” (actually just copying from the extension’s local storage) the resulting WACZ archive.

The extension includes an archive player based on the same code as replayweb.page too, so you can browse through your archives entirely in the extension, like this:

I primarily use Firefox, but this extension is only available for Chromium browsers. The developer explains why on HN; in theory, FF support would be possible, but it would require rewriting to use FF specific APIs.

Other options

There are lots of other options too, which I have explored less thoroughly than these. Several use a headless Chromium, which allows command-line use (like wget) and a full browser runtime to capture dynamic content (like archiveweb.page), but they can be slow. All methods require manual checking to ensure that the archive you got actually contains the content you want, wasn’t rate limited or blocked, etc.

- Scoop, from Harvard’s https://tools.perma.cc, is an command-line tool and JavaScript library that uses headless Chromium to create archives. Read last year’s announcement post for more background on where it came from and why they built it.

- Browsertrix Crawler, from the https://webrecorder.net team, is a Docker-based headless Chromium command-line tool.

- pywb, also from the https://webrecorder.net team, can both retrieve archives and host an index of them.

- ArchiveBox is an archive server and a set of crawlers, including some that download WARC files, for hosting a local archive.

Detour: keeping archives in git-annex

Archives are often multi-megabyte files. I didn’t want to store them in the git database for performance reasons, but I did want a resilient copy of them, and I need them accessible from my site’s CI.

I settled on using git-annex for this.

I’d probably recommend git-lfs

if your git host supports it

(Github, GitLab, etc all do),

but mine doesn’t, and git-annex can be used with an S3 bucket instead.

Displaying archives with replayweb.page

Another https://webrecorder.net project, https://replayweb.page, can “replay” web archive files, specifically the WARC and WACZ formats.

- It’s self hostable,

and really easy to set up. Add the

replaywebpage NPM package

and use its

<replay-web-page>web component to display the archives. - It’s secure. It prevents the archived page from accessing anything outside of the archive.

- It works on static sites, with no server-side code required.

<replay-web-page> web components support embedding in any page, like an iframe.

So far in my explorations, however, I haven’t found that to be a very nice experience

(also like an iframe).

I opted to make a separate page in my site for each archive file,

and allow it to take up the whole page.

As an example, here’s an archive of my ZSA interview again.

Further reading

- The webrecorder project is also behind the fantastic https://oldweb.today/, which will run an emulated historical operating system and browser and browse saved web pages from the Internet Archive. You can see not only an old version of a web page, but how a contemporary browser would have rendered it, including Flash and Java applets! “Cyberspace, the old-fashioned way” describes the design and how it was built.

- Gwern’s Archiving URLs is required reading for anyone interested in archiving web data.

- My own newly minted archivism tag, also applied to my sadly deprecated twarchive project, which allowed keeping a high fidelity local archive of tweets and Twitter threads but has been broken since Twitter’s v1 API was deprecated.